Computational Biology Methods and Their Application to the Comparative Genomics of Endocellular Symbiotic Bacteria of Insects


The emergence of genome information has overwhelmed our efforts to analyze the unexpected amount of data generated during the last two decades. As an example, today (February 2009), there are 438 complete microbial genomes and 17 in draft at the J. Craig Venter Institute Comprehensive Microbial Resource website (URL: http://cmr.jcvi.org/tigr-scripts/CMR/CmrHomePage.cgi). Considering that this is only a single resource, we estimate that the number of completed genomes will be on the order of double that by the end of 2009, with a considerable percentage of these already published in the literature. Already, the Entrez Genome Project website maintained by the National Center for Biotechnology Information (NCBI) reports that, as of February 3, 2009, 857 genomes are complete, 815 are in draft assembly, and 989 are in progress (http://www.ncbi.nlm.nih.gov/genomes/static/gpstat.html). The number of institutes worldwide with increasing sequencing capacities has been rising at an exponential rate, and the first results of analyzing such data have resolved old and long-debated hypotheses and have also generated breakthrough ideas that have opened new avenues in all fields of genetics and evolutionary biology. However, our ability to cope technically with the amount of raw data generated has become seriously compromised, fueling many initiatives aimed at developing computational tools to analyze genomic and proteomic data. Many of these tools have been developed to perform comparative genomic analyses; each has had to face many of the complexities caused by biologically driven genome remodeling phenomena, such as genome duplication, rearrangement, and shrinkage. In this review, we first discuss the different technologies developed to perform genomic and proteomic analyses. We then focus on the importance of the developed tools for studying biologically important phenomena such as genome duplication, the dynamics of genome rearrangement, and the genome shrinkage that is associated with the intracellular life of bacteria.

Comparative genomic methods are vast in number as well as function. Deciding on the best method for a given task is often long and arduous in this field, which has led to the design and reengineering of many of the available tools. To describe every method in this area of research would be next to impossible, and so this text provides a snapshot of what is available for many of the common tasks in comparative genomics. The logical place to start is of course the beginning: genome sequencing, assembly, and closing. We then continue with the intricacies of comparative genomics.

While in the past comparative genomics has concentrated on sequencing single genomes and parts of genomes, current excitement lies with the sequencing of environmental communities. This field of research, termed metagenomics, is growing fast and is the current hot topic. It is most often applied to characterize unculturable organisms (an estimated 99% of microbes cannot be cultivated in a laboratory environment (1)), but it has also made it possible to sequence genomes without the problems that are associated with cultures maintained in laboratories (2). Metagenomics has transformed the uses of such organisms by allowing the focus to move away from those that can be cloned in culture (3). Depending on the source of the environmental sample subjected to environmental shotgun sequencing, the number of identified species can vary enormously. Looking at prokaryotes alone, as few as five species were identified in a community sequencing of an acid mine biofilm by Tyson et al. (4); in contrast, as many as 3,000 species were sequenced from a soil sample taken in Minnesota, USA, and analyzed by Tringe et al. (5). For a comprehensive review of this subject, see (6).

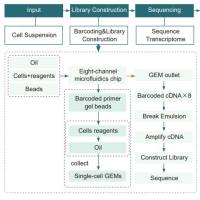

As described above, in the whole-genome approach the genome is fragmented into reads of defined length, which are then assembled using purely bioinformatic techniques. The second approach, which is more appropriate for larger genomes, adds a step to reduce the computational requirement of assembling the final sequence (Fig. 1b). First, the genome is broken into larger fragments whose order is known; these fragments are then sequenced using the normal shotgun approach. This method requires less computational intervention to assemble the reads into the correct order: the order of each subset of reads is already known, and thus less error is incurred in the final assembly. Of course, each of these approaches has disadvantages. With the whole-genome approach, there is uncertainty as to whether the assembly is correct, because joining and ordering the reads relies entirely on bioinformatics tools; in addition, coverage (i.e., overlap between the fragments) may be insufficient. The second approach is time consuming and labor intensive because of the extra step at the beginning of the protocol (10); it is also susceptible to incomplete coverage (11). Further advances have been made since the advent of shotgun sequencing, but the central concepts remain the same.
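The purely computational step of the whole-genome approach, joining reads by their overlaps, can be illustrated with a toy example. The following is a minimal sketch and not the algorithm used by any assembler named in this text: it assumes error-free reads, a single strand, and a simple greedy merge strategy. The function names `overlap` and `greedy_assemble` and the example sequence are hypothetical.

```python
def overlap(a: str, b: str, min_len: int = 3) -> int:
    """Return the length of the longest suffix of `a` that equals a prefix of `b`.

    Overlaps shorter than `min_len` are treated as no overlap (0).
    """
    max_len = min(len(a), len(b))
    for n in range(max_len, min_len - 1, -1):
        if a.endswith(b[:n]):
            return n
    return 0


def greedy_assemble(reads: list[str], min_len: int = 3) -> list[str]:
    """Toy greedy assembly: repeatedly merge the pair with the largest overlap.

    Assumes error-free, same-strand reads; real assemblers must also handle
    sequencing errors, repeats, and reverse complements.
    """
    contigs = list(dict.fromkeys(reads))  # drop exact duplicates, keep order
    while True:
        best_n, best_i, best_j = 0, -1, -1
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    n = overlap(a, b, min_len)
                    if n > best_n:
                        best_n, best_i, best_j = n, i, j
        if best_n == 0:  # no remaining overlaps: return the contigs found
            return contigs
        merged = contigs[best_i] + contigs[best_j][best_n:]
        contigs = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)]
        contigs.append(merged)


# Hypothetical 21-base "genome" cut into three overlapping reads, given out of order.
genome = "ATGGCGTACGTTAGCCTAGGA"
reads = [genome[12:21], genome[0:10], genome[6:16]]
print(greedy_assemble(reads))
```

If two reads do not overlap by at least `min_len` bases, the sketch returns more than one contig, which is the toy analogue of the insufficient coverage discussed above.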

Technologies currently used in genome sequencing include high-throughput methods such as 454 (12), SOLiD (Applied Biosystems), and Solexa (13). These methods differ from older technologies in their throughput: hundreds of thousands of DNA molecules are sequenced at the same time, instead of a single DNA clone being processed (14). The reads returned by each of these technologies are very short; thus, assembly is rather difficult. This disadvantage is offset by the fact that so much DNA is sequenced. The sequencing methodology of these approaches, in particular 454, is called pyrosequencing. Essentially, it sequences DNA by detecting enzymatic activity to identify the bases, a process termed "sequencing-by-synthesis" (15). Future developments will of course increase the length of the reads produced by these technologies, as well as the accuracy of the programs with which the fragments are assembled.

Discussion in the past has provided some insight into the pitfalls of each method and perhaps aided the decision-making process (14, 16, 17). One thing is certain: the higher the coverage a method is able to achieve, the higher the likelihood that the assembly tool will get the correct result, so coverage should be one of the foremost considerations in the decision-making process.
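To make the notion of coverage concrete, the sketch below computes the average depth of coverage as (number of reads × read length) / genome size. It also estimates the fraction of the genome expected to be covered by at least one read, using the idealized Lander-Waterman model (1 − e^(−c)). That model is not taken from this text; it assumes reads are sampled uniformly at random, which real sequencing runs only approximate. The function names and the numbers in the example are hypothetical.

```python
import math


def coverage(num_reads: int, read_length: int, genome_size: int) -> float:
    """Average depth of coverage: total sequenced bases divided by genome size."""
    return num_reads * read_length / genome_size


def expected_fraction_covered(c: float) -> float:
    """Expected fraction of bases covered by at least one read at depth `c`.

    Idealized Lander-Waterman estimate, assuming uniformly random read starts.
    """
    return 1.0 - math.exp(-c)


# Hypothetical run: 100,000 reads of 100 bases from a 1 Mb genome.
c = coverage(num_reads=100_000, read_length=100, genome_size=1_000_000)
print(f"coverage = {c:.1f}x, expected fraction covered = {expected_fraction_covered(c):.5f}")
```

Under this model, the expected uncovered fraction shrinks exponentially as coverage increases, which is one way to see why higher coverage makes a correct assembly more likely.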

After genome sequencing is complete, it then becomes necessary to reconstruct the sequence fragments into a meaningful order that will accurately reflect the original orientation and order of the gene and junk (noncoding regions and pseudogenes) content. The most common and popular manner in which this is achieved is through the Phred (18, 19)-PHRAP (20)-CONSED (21) pipeline of tools (all of which originate from the University of Washington).

When assembling sequences from the myriad of reads that encompass a genome, several factors must be accounted for. First, base-calling (the operation of determining the nucleotide base sequence from the chromatogram) must be completed with a minimum of erroneous interpretations of the chromatogram. The base-caller determines the nucleotide sequence of each read; the assembler is then used to piece the reads together into their original order, but in doing so it must account for insertions, deletions, rearrangements, inversions, and sequence divergence. These events are particularly important when assembling with a comparative method (i.e., using the scaffold of an existing genome to predict the locations of the fragments in the newly sequenced genome). No assembler (to date) claims to handle all of these complications successfully, but some do claim to be more capable than others under certain circumstances. For example, Pop et al. (22) reported that PHRAP (20) is more adept at creating long contigs (collections of contiguous pieces of DNA (reads)) than other available methods such as TIGR Assembler (23) or Celera Assembler (WGS-Assembler) (24). Contig length can be valuable and has been used in the past as an indication of the success of an assembler. More recently, it has been reported that a reduction in the length of contigs across the assembly is an acceptable outcome if the error rate is reduced (25). Probably the most widely used base-calling algorithm is implemented in Phred (18, 19). Others include GeneObject (26) and Life-Trace (27).
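As background not stated in this text: Phred reports the confidence of each base call as a quality score Q, related to the estimated probability P that the call is wrong by Q = −10 · log10(P). The sketch below only illustrates that conversion; it is not Phred's base-calling algorithm, and the function names are hypothetical.

```python
import math


def phred_quality(error_prob: float) -> float:
    """Convert a base-call error probability into a Phred-scaled quality score."""
    return -10.0 * math.log10(error_prob)


def error_probability(quality: float) -> float:
    """Convert a Phred-scaled quality score back into an error probability."""
    return 10.0 ** (-quality / 10.0)


# A few illustrative quality values and the error rates they correspond to.
for q in (10, 20, 30):
    print(f"Q{q}: about 1 error in {round(1 / error_probability(q))} base calls")
```

An assembler can use scores of this kind to decide how much weight to give each base when reads disagree at a position.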

PHRAP has been widely adopted as an integral component of assembly pipelines, such as those implemented by Havlak et al. (28) in the Atlas Genome Assembly System and by Mullikin and Ning (29) in the Phusion Assembler. It is considered the standard way to assemble smaller genomes, with larger genomes relying on more complex algorithms provided by programs such as the WGS-Assembler.
